Click to learn more about author Emilio Silvestri.

To match the increasing number of organizations turning to cloud repositories and services to attain top levels of scalability, security, and performance, our company provides connectors to a variety of cloud service providers. We recently published an article about KNIME on AWS, for example. Continuing with our series of articles about cloud connectivity, this blog post is a tutorial introducing you to our company on Google BigQuery.

BigQuery is the Google response to the big data challenge. It is part of the Google Cloud Console and is used to store and query large datasets using SQL-like syntax. Google BigQuery has gained popularity thanks to the hundreds of publicly available datasets offered by Google. You can also use Google BigQuery to host your own datasets.

Note: While Google BigQuery is a paid service, Google offers 1 TB of queries for free. A paid account is not necessary to follow this guide.

Since many users and companies rely on Google BigQuery to store their data and for their daily data operations, our Analytics Platform includes a set of nodes to deal with Google BigQuery, which is available from version 4.1.

In this tutorial, we want to access the Austin Bike Share Trips dataset. It contains more than 600k bike trips during 2013-2019. For every trip, it reports the timestamp, the duration, the station of departure and arrival, and information about the subscriber.

In Google: Grant Access to Google BigQuery

In order to grant access to Google BigQuery:

1. Navigate to the Google Cloud Console and sign in with your Google account (i.e., your Gmail account).

2. Once you’re in, either select a project or create a new one. Here are instructions to create a new project, if you’re not sure how.

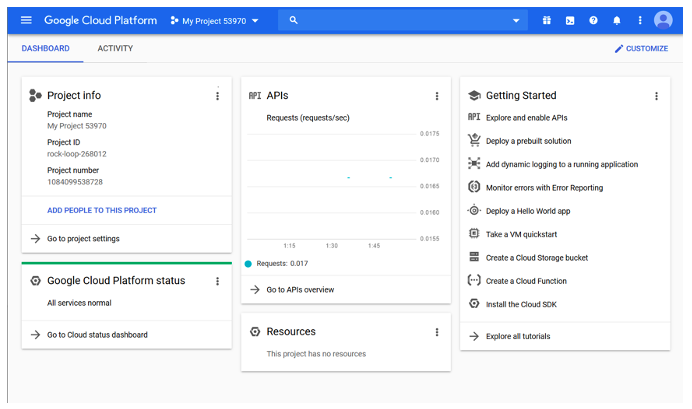

3. After you have created a project and/or selected your project, the project dashboard opens (Fig. 1), containing all the related information and statistics.

Now let’s access Google BigQuery:

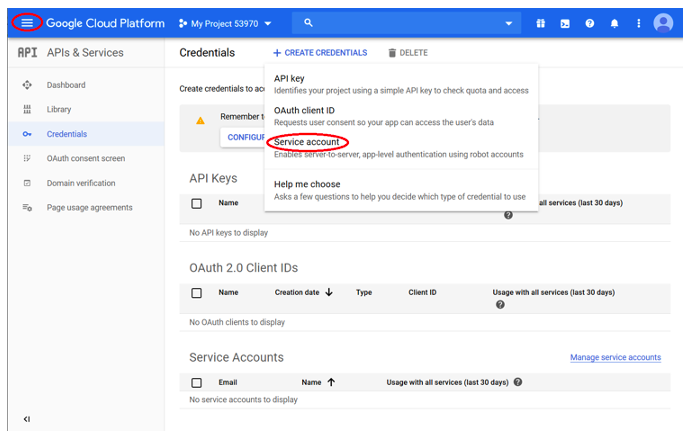

1. From the Google Cloud Platform page click the hamburger icon in the upper left corner and select “API & Services > Credentials.”

2. Click the blue menu called “+Create credentials” and select “Service account” (Fig. 2).

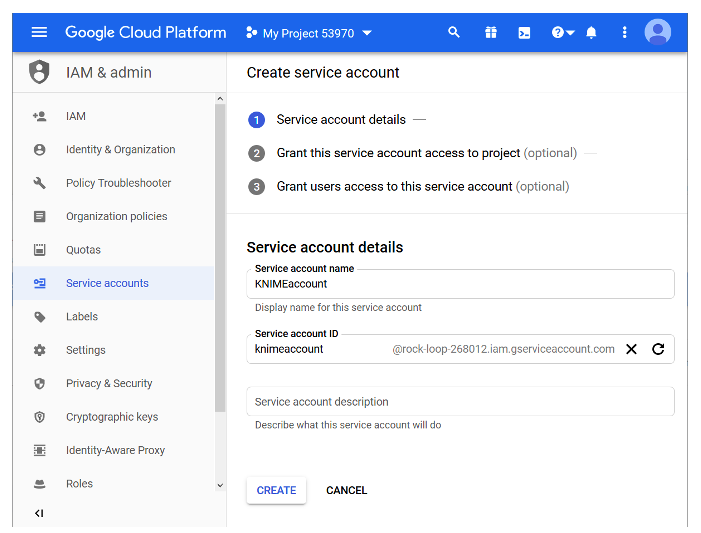

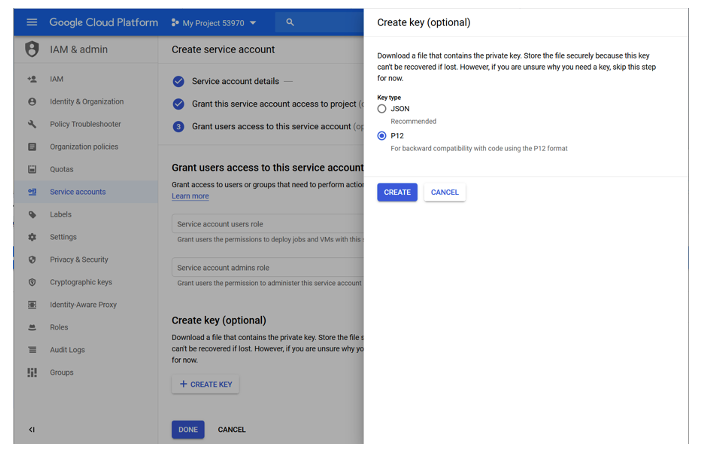

Now let’s create the service account (Fig. 3):

1. In the field “Service account name” enter the service account name (of your choice).

- In this example we used the account name “KNIMEAccount.”

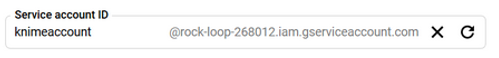

2. Google now automatically generates a service account ID from the account name you provide. This service account ID has an email address format. For example, in Figure 3 below you can see that the service account ID is: knimeaccount@rock-loop-268012.iam.gserviceaccount.com

- Note: Remember this Service Account ID! You will need it later in your workflow.

3. Click Create to proceed to the next step.

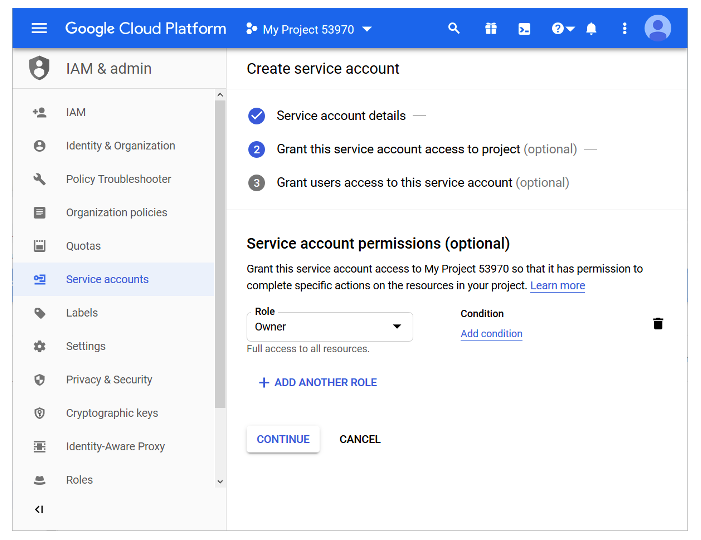

4. Select a role for the service account. We selected “Owner” as the role for our account.

5. Click Continue to move on to the next step.

6. Scroll down and click the Create key button.

7. In order to create the credential key, make sure that the radio button is set to P12. Now click Create.

8. The P12 file, containing your credentials. is now downloaded automatically. Store the P12 file in a secure place on your hard drive.

Connect to Google BigQuery

Uploading and Configuring the JDBC Driver

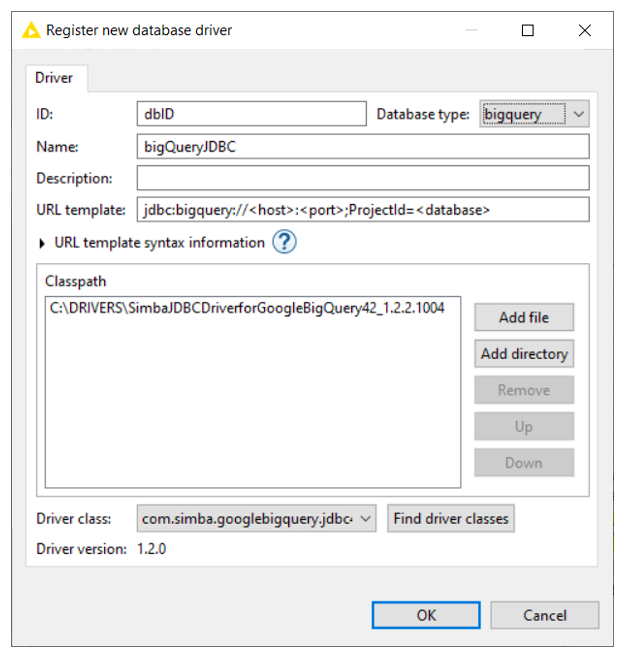

Currently, in version 4.1 of our Analytics Platform, the JDBC driver for Google BigQuery isn’t one of the default JDBC drivers, so you will have to add it.

To add the Google BigQuery JDBC Driver:

1. Download the latest version of the JDBC driver for Google BigQuery, freely provided by Google.

2. Unzip the file and save it to a folder on your hard disk. This is your JDBC driver file.

3. Add the new driver to the list of database drivers:

- In the Analytics Platform, go to File > Preferences > KNIME > Databases and click Add.

- The “Register new database driver” window will open (Fig. 4).

- Enter a name and an ID for the JDBC driver (for example, name = bigQueryJDBC and ID=dbID)

- In the Database type menu, select bigquery.

- Complete the URL template form by entering the following string: jdbc:bigquery://<host>:<port>;ProjectId=<database>

- Click Add directory. In the window that opens, select the JDBC driver file (see item 2 of this step list).

- Click Find driver class, and the field with the driver class is populated automatically.

- Click OK to close the window.

4. Now click Apply and close.

Extracting Data from Google BigQuery

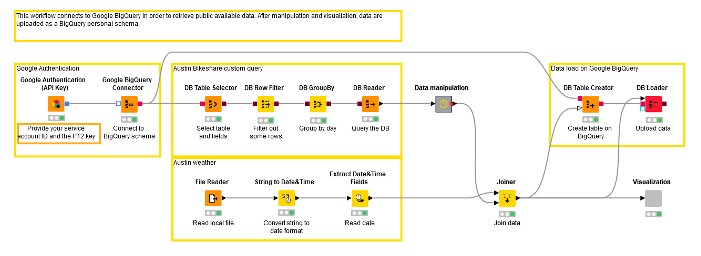

We are now going to start building our workflow to extract data from GoogleBigQuery. In this section we will be looking at the Google Authentication and Austin Bike Share customer query parts of this workflow:

We start by authenticating access to Google: In a new workflow, insert the Google Authentication (API Key) node.

How to Configure the Google Authentication (API Key) Node

The information we have to provide when configuring the node here is:

- The service account ID, in the form of an email address, which was automatically generated when the service account was created; in our example it is:

- And the P12 key file

Now that we have been authenticated, we can connect to the database, so add the Google BigQuery Connector node to your workflow.

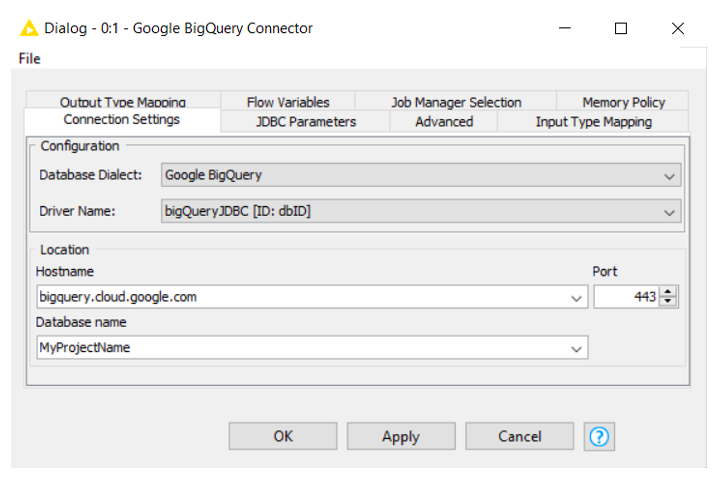

How to Configure the Google BigQuery Connector Node

1. Under “Driver Name” select the JDBC driver, i.e., the one we named BigQueryJDBC.

2. Provide the hostname. In this case we’ll use bigquery.cloud.google.com, and the database name. As database name here, use the project name you created/selected on the Google Cloud Platform.

3. Click OK to confirm these settings and close the window.

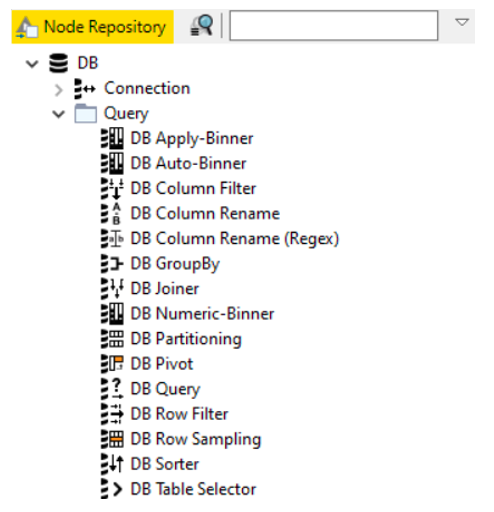

BigQuery has essentially become a remote database. Therefore, we can now use the DB nodes available in our Analytics Platform. In these nodes, you can either write SQL statements or fill GUI-driven configuration windows to implement complex SQL queries. The GUI-driven nodes can be found in the DB -> Query folder in the Node Repository.

Now that we are connected to the database, we want to extract a subset of the data according to a custom SQL query.

We are going to access the austin_bikeshare trips database within a specific time period.

Let’s add the DB Table Selectornode, just after the BigQuery Connector node.

How to Configure the DB Table Selector Node

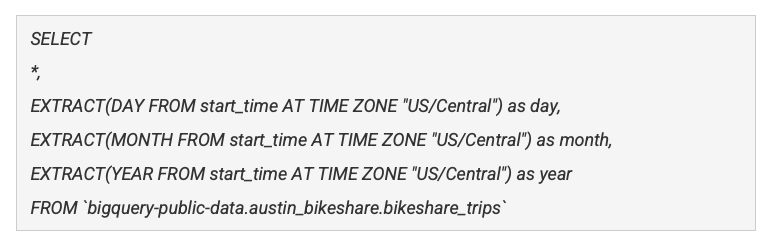

Open the configuration, click Custom Query, and enter the following SQL statement in the field called SQL Statement:

Basically, we are retrieving the entire bikeshare_trips table stored in the austin_bikeshare schema, which is part of the bigquery-public-data project offered by Google. Moreover, we already extracted the day, month, and year from the timestamp, according to the Austin timezone. These fields will be useful in the next steps.

Remember: When typing SQL statements directly, make sure you use the specific quotation marks (“) required by BigQuery.

We can refine our SQL statement by using a few additional GUI-driven DB nodes. In particular, we added a Row Filter to extract only the days in [2013, 2017] year range and a GroupBy node to produce the trip count for each day.

Finally, we append the DB Readernode to import the data locally into the workflow.

Uploading Data Back to Google BigQuery

After performing a number of operations, we would like to store the transformed data back on Google BigQuery within the original Google Cloud project.

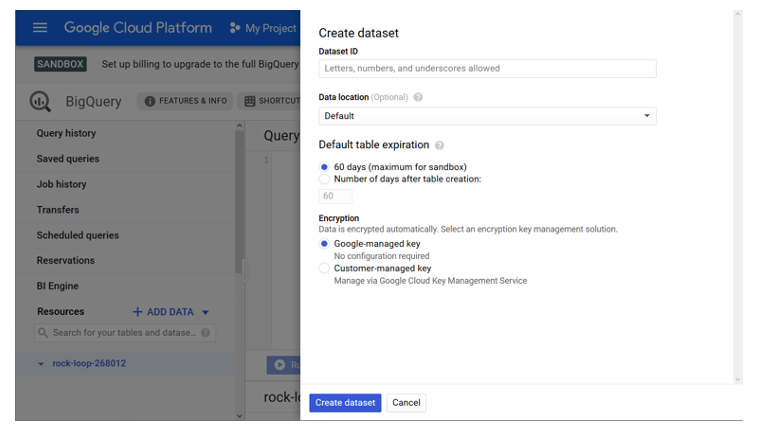

First, create a schema where the data will be stored.

1. Go back to the Cloud Platform console and open the BigQuery application from the left side of the menu

2. On the left, click the project name in the Resources tab.

3. On the right, click Create dataset.

4. Give a meaningful name to the new schema and click Create dataset. For example, here we called it “mySchema” (Fig. 9).

- Note that, for the free version (called here “sandbox”), the schema can be stored on BigQuery only for a limited period of 60 days.

In your workflow now, add the DB Table Creator node to the workflow and connect it to the Google BigQuery Connector node.

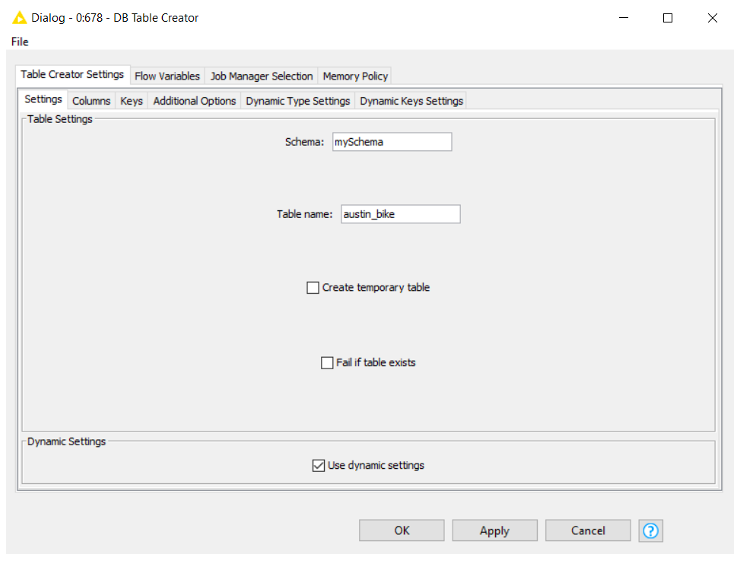

How to Configure the DB Table Creator Node

1. Insert the name of the previously created schema. As above, we filled in “mySchema.”

2. Provide the name of the table to create. This node will create the empty table where our data will be placed, according to the selected schema. We provided the name “austin_bike.”

- Note: be careful to delete all the space characters from the column names of the table you are uploading. They would be automatically renamed during table creation and this will lead to conflict, since column names will no longer match.

Add the DB Loader node and connect it to the DB Table Creator and the table whose content you want to load.

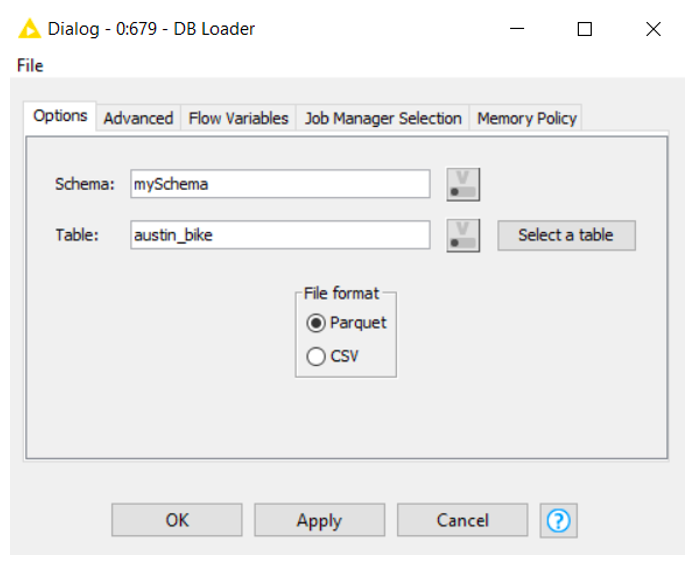

How to Configure the DB Loader Node

In the configuration window insert the schema and the name of the previously created table. Still, for our example, we filled in “mySchema” as schema name and “austin_bike” as table name.

If all the steps are correct, executing this node will copy all the table content into our project schema on Google BigQuery.

Finito!

(This article was originally published on the KNIME blog.)