Click to learn more about author Mike Brody.

Let me be the first to acknowledge that agility is important. BI product’s should be predicated on the idea that end users should have direct control over their own data. I believe that it’s better for business professionals to answer their own analytical questions than to inundate IT staff with reporting requests, creating that growth-stunting IT bottleneck we’ve all encountered at one point or another.

It’s in large part because I know what it takes to manage a self-service analytics environment that I want to take a closer look at the risks around self-service data preparation and how providers are addressing them. It’s critical that consumers have a clear understanding of the technology and its pitfalls before vetting self-service data preparation solutions.

Self-service data preparation, also sometimes referred to as “self-service ETL,” is to data warehousing what self-service analytics is to canned reports: a do-it-yourself solution that circumvents IT resources for quicker insights. Sounds pretty good, right? If business users lose nothing by writing their own reports, where’s the harm in allowing them to ETL their own data?

The danger is in the degree of freedom self-service ETL permits. In a 2015 report titled Embrace Self-Service Data Preparation Tools for Agility, but Govern to Avoid Data Chaos, Gartner warns that these “self-service capabilities can introduce multiple versions of the truth, increase errors in reporting and leave companies exposed to inconsistent information.” Whereas self-service analytics offers agility within the confines of a data warehouse (DW) designed and maintained by IT professionals, self-service data preparation bypasses even that by enabling end users to connect to raw data – data that may or may not already be part of a centralized DW – transform it as their purposes require, and then load that newly created data set into a shareable repository.

This raises two areas of concern: Data Quality and security. First, giving end users this kind of leverage effectively undermines the core benefit of having a data warehouse in the first place. The goal of a DW (and any concomitant data marts) is to eliminate data silos in favor of a single source of truth, and democratizing ETL appears to threaten that Data Quality standard. Conflicting data sets lead to confusion over even basic definitions, such as what qualifies as a customer or a lead. If half a sales team is operating according to one DW-based definition of a lead and the other half is using another ad-hoc-ETL-based definition, the company at large could be bleeding revenue as a result.

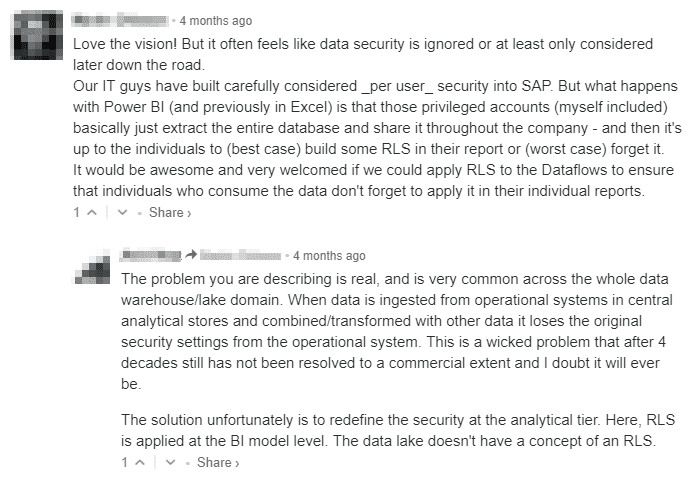

Second, how are companies utilizing these self-service ETL tools ensuring that their data doesn’t end up in uncredentialed hands? Are business users expected to apply row-level security (RLS) as part of their ETL process? RLS logic can be highly complex and variable, presenting management challenges to even those with specialized training, as illustrated in the conversation below. With the proliferation of increasingly stringent regulations around data privacy like the GDPR, the cost of a data breach could be astronomical.

Above: PowerBI’s recent unveiling of its self-service data preparation tool Dataflows is met with concerns regarding row-level security (RLS).

Risks aside, let’s assume for the moment that self-service data preparation isn’t going anywhere. To my mind, it’s futile to try to quash market demand for this technology, so the question then becomes how to deploy it safely. What are those of us providing these solutions doing to furnish the security and governance needs of the companies and organizations we serve?

Let’s start with security. One thing self-service data preparation tool providers are doing to keep sensitive information safe is to give business users access only to raw data they have clearance to see (Method 1 in the diagram below). This means implementing row-level security before users obtain access, not after; the data would otherwise be left raw for users to shape as they will.

Alternatively, a tool might allow IT administrators to build an intermediary security layer that automatically filters the data users retrieve before they ETL (Method 2). Only end users with permission to bypass the security layer would have unmitigated access to the full, raw data set. Both of these methods assume the data preparer doesn’t have top-level clearance, but in an environment where all preparers do, a pre-configured but modifiable security layer could filter the ETL’d data as other users retrieve it (Method 3).

Above: A diagram of possible self-service ETL security methodologies with vertical dotted lines representing RLS or other security implementations.

Methods 1 and 2 both secure the data before it ever reaches the ETL author, but Method 2 has the benefit of having fewer steps and therefore being more efficient. In either case, an ETL author would only have the ability to share data with users of an equal or greater clearance level. Method 3, by contrast, puts the onus on either the ETL author or perhaps on the BI layer to implement RLS; the conversation above suggests this may be PowerBI’s method. Paxata, by contrast, relegates security tasks to IT organizations within a company, which sounds more like Method 2. Simply thinking about self-service data preparation security in these terms might help organizations vet potential solutions and determine which best meets their needs.

This of course still leaves the single-source-of-truth issue. If a large enough group of business users are relying on an ad hoc data set for an extended period of time, it might belie a shortcoming in the centralized DW. Popular data sets, if monitored, could become candidates for integration into the organization’s core warehouse. Conversely, seldom-used data sets could be archived if left untouched for a specified period of time, helping manage the enterprise’s data inventory and conserve space. Facilitating this requires some built-in monitoring/auditing functionality as well as automated metadata file generation.

Even more important than continually innovating on the central DW, however, would be to giving consumers of self-service ETL’d data some indication of where that data came from. It should be obvious that a data set was curated, and each ETL’d data set should be tagged with lineage and provenance metadata — author, creation date, clearance level, primary sources, transformations, etc. — so that its consumers have some insight into how it might differ from the data they typically use and, if needed, approach the author with semantic questions. ETL processes like this one, in which a major healthcare company extracts data fields from a static PDF, should at least be made transparent to those ultimately consuming the data.

Both self-service analytics and self-service data preparation should be deployed with care, but the latter deserves some extra scrutiny for the unparalleled freedoms it affords end users. Organizations entertaining the adoption of a self-service data preparation solution should closely consider whether, how, and to what extent these solutions safeguard against data security and quality risks before taking the plunge.