By Roland Bullivant.

Data Catalogs are a fast-growing and critical component of an Enterprise Information Management strategy. They provide a way to document how data is used across the enterprise data landscape, and help users find that data and understand how it is used. This is particularly valuable for Data Governance and compliance, Self-Service Business Intelligence and Analytics, and Data Management and lineage projects.

Delivering an effective Data Catalog, however, requires the ability to locate metadata in a variety of sources and to populate the catalog with it. Metadata gives users vital information about the meaning of the data held in their IT systems.

As an indication of the potential size and importance of the Data Catalog market, research by Stratistics MRC estimates that the market will grow at a compound annual growth rate (CAGR) of 25.8% between 2017 and 2026, reaching almost $1.6B a year.

The significance of Data Catalogs to the success of a Data Management strategy is emphasised in a 2017 Gartner report, ‘Data Catalogs are the New Black in Data Management and Analytics’. In it, Gartner suggests that “Data Catalogs enable data and analytics leaders to introduce agile information governance, and to manage data sprawl and information supply chains, in support of digital business initiatives.”

Data Catalogs provide both a place to store information about an organisation’s data assets and mechanisms for using, enriching, managing and valuing that information. Eckerson Group’s ‘The Ultimate Guide to Data Catalogs’ emphasises the role of metadata:

“Metadata is the core of a Data Catalog. Every catalog collects data about the data inventory and also about processes, people, and platforms related to data. Metadata tools of the past collected business, process, and technical metadata, and Data Catalogs continue that practice.”

Enterprise Data Landscapes

Most large organisations have a wide variety of applications, file stores and home-grown systems, acquired over the years, that contain vital business data. While some pioneering businesses have tried to optimise their understanding and use of data in the past, only relatively recently has documenting and categorising this data become mainstream. Much of this is driven by increasing compliance requirements (e.g. the EU GDPR) and by wider acceptance that data is an asset that can be optimised.

Rather than containing actual data, a Data Catalog delivers value by giving technical and business users alike a way to make use of the metadata: the descriptions of the data structures that underpin their source systems.
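To make the distinction concrete, here is a minimal sketch of what a catalog entry might hold, written with Python’s standard-library dataclasses. The class and field names (`TableMetadata`, `ColumnMetadata`, `Relationship`) and the sample `crm_prod`/`CUST` entry are hypothetical and not any product’s schema; the point is that an entry records structure and meaning (technical names, business names, descriptions, relationships, tags) and never the rows themselves.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ColumnMetadata:
    """One attribute of a source table. No data values are stored."""
    technical_name: str
    data_type: str
    business_name: Optional[str] = None
    description: Optional[str] = None


@dataclass
class Relationship:
    """A link from columns in this table to columns in another table."""
    from_columns: List[str]
    to_table: str
    to_columns: List[str]


@dataclass
class TableMetadata:
    """A catalog entry for one source table: its structure and meaning, not its rows."""
    source_system: str
    technical_name: str
    business_name: Optional[str] = None
    description: Optional[str] = None
    columns: List[ColumnMetadata] = field(default_factory=list)
    relationships: List[Relationship] = field(default_factory=list)
    tags: List[str] = field(default_factory=list)


# Hypothetical entry for a customer table in a CRM system.
customer = TableMetadata(
    source_system="crm_prod",
    technical_name="CUST",
    business_name="Customer",
    description="One row per customer account",
    columns=[
        ColumnMetadata("CUST_ID", "INTEGER", business_name="Customer ID"),
        ColumnMetadata("EMAIL", "TEXT", business_name="Email address"),
    ],
)
```

Fields such as `business_name` and `relationships` are optional here precisely because many sources cannot supply them.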

Identifying Metadata

One of the early tasks during the implementation of a Data Catalog is to identify the sources of that metadata and to start importing it. Any good catalog solution has a range of scanners and connectors for identifying and mapping metadata from many different sources, which can be incredibly diverse, as Eckerson Group make clear:

“The initial build of a Data Catalog typically scans massive volumes of data to collect large amounts of metadata. The scope of data for the catalog may include any or all of data lakes, data warehouses, data marts, operational databases, and other data assets determined to be valuable and shareable. Collecting the metadata manually is an imposing and potentially impossible task.”
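As a rough illustration of what a scanner does, the sketch below reads structural metadata from SQLite’s own system catalog (`sqlite_master` plus the `PRAGMA table_info` and `PRAGMA foreign_key_list` statements) using Python’s standard `sqlite3` module. SQLite is only a stand-in: commercial catalog scanners target many platforms, each through that platform’s own catalog, and the function name and output shape here are assumptions for illustration.

```python
import sqlite3
from typing import Dict, List, Tuple


def scan_sqlite_schema(conn: sqlite3.Connection) -> Dict[str, dict]:
    """Collect table, column and foreign-key metadata from SQLite's system catalog.

    Only the schema is read; no table rows are queried.
    """
    schema: Dict[str, dict] = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns: List[Tuple[str, str]] = [
            (row[1], row[2]) for row in conn.execute(f'PRAGMA table_info("{table}")')
        ]
        # foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match);
        # kept as (from column, referenced table, referenced column).
        foreign_keys: List[Tuple[str, str, str]] = [
            (row[3], row[2], row[4])
            for row in conn.execute(f'PRAGMA foreign_key_list("{table}")')
        ]
        schema[table] = {"columns": columns, "foreign_keys": foreign_keys}
    return schema


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customer(id)
    );
    """
)
schema = scan_sqlite_schema(conn)
```

A scanner like this can only report what the database itself declares: if no foreign keys were defined, `foreign_keys` comes back empty, and nothing in the system catalog supplies a business name.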

A Data Catalog solution automates much of the effort required to collect metadata, using a blend of scanners, Machine Learning and other algorithms. These enable metadata to be extracted, data conflicts to be exposed, business terms and meaning to be inferred, and data to be tagged for privacy, compliance and search.
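Tagging is the easiest of these tasks to picture. The sketch below is deliberately simpler than the Machine Learning described above: it applies hypothetical regular-expression rules to column names and attaches privacy tags. The rule set and tag names are invented for illustration. It also exposes a weakness of name-based inference: a cryptic, abbreviated column name such as `KUNNR` matches nothing.

```python
import re
from typing import Dict, List

# Hypothetical rules: a regex on the column name -> the tag to apply.
# A real catalog might use ML classifiers or data profiling instead.
PRIVACY_RULES: Dict[str, re.Pattern] = {
    "pii:email": re.compile(r"e[-_]?mail", re.IGNORECASE),
    "pii:phone": re.compile(r"phone|mobile", re.IGNORECASE),
    "pii:birth_date": re.compile(r"birth|dob", re.IGNORECASE),
    "pii:name": re.compile(r"(first|last|full)[-_]?name", re.IGNORECASE),
}


def tag_columns(columns: List[str]) -> Dict[str, List[str]]:
    """Return, for each column name, the tags whose pattern matches it."""
    return {
        col: [tag for tag, pattern in PRIVACY_RULES.items() if pattern.search(col)]
        for col in columns
    }


tags = tag_columns(["CUST_ID", "EMAIL_ADDR", "DOB", "LAST_NAME", "KUNNR"])
```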

There are also a variety of more bespoke methods of capturing metadata, for example crowdsourcing or activities led by catalog curators.

Challenging Data Sources

However, there are also various classes of system whose valuable metadata cannot be accessed or used through these normal methods. Sometimes known as ‘challenging data sources’, these include the large, complex and often highly customised ERP and CRM packages from SAP, Oracle, Microsoft and similar vendors. These packages hold large amounts of transaction data critical to the success of many thousands of businesses, so it is vital that their metadata is included in a Data Catalog.

The core of the problem is that the database system catalog holds no meaningful metadata, such as ‘business names’ and descriptions for tables and attributes, and defines no relationships between tables. As a result, even if a Data Catalog scanner reads the schema of the application database, the results are of little value.

The same applies to organisations with large Salesforce landscapes, which often discover this hidden challenge when they try to identify relevant metadata about their systems or share it with solutions such as Data Catalogs.

In addition, application databases contain so many tables and attributes that they can overwhelm Data Catalog users with metadata that is meaningless without business descriptions, relationships and so on.
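One hypothetical way to make “of little value” measurable is to check what share of scanned tables actually carry a business name, a description or any relationships. The sketch below assumes each scanned table is a plain dict with those optional keys; a raw scan that yields only technical names scores zero on all three, however many tables it contains.

```python
from typing import Dict, List

FIELDS = ("business_name", "description", "relationships")


def metadata_coverage(tables: List[dict]) -> Dict[str, float]:
    """Fraction of tables with a non-empty value for each kind of meaningful metadata."""
    if not tables:
        return {key: 0.0 for key in FIELDS}
    return {key: sum(1 for t in tables if t.get(key)) / len(tables) for key in FIELDS}


# A hypothetical raw scan: 1,000 tables with technical names only.
raw_scan = [{"name": f"T{i:05d}"} for i in range(1000)]
raw_coverage = metadata_coverage(raw_scan)

# The same scan after every other table has been given a business name.
enriched = [
    dict(t, business_name=f"Business name {i}") if i % 2 == 0 else t
    for i, t in enumerate(raw_scan)
]
enriched_coverage = metadata_coverage(enriched)
```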

For example, importing the database schema of an SAP system with over 90,000 tables and 1,000,000 attributes from its system catalog into a Data Catalog, with no ‘business names’, descriptions or relationships, gives users nothing to help them navigate and understand the metadata. Recent research highlights this challenge.

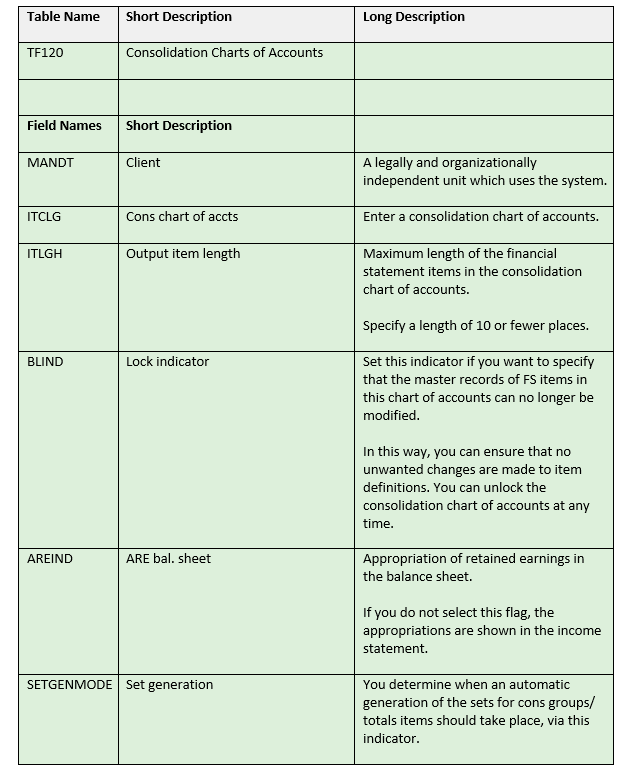

To illustrate the point, how would a typical Data Catalog user know what SAP table TF120 contains from this information?

Of course, if you have multiple instances of an ERP package such as SAP, and the data models differ across instances, the problem of understanding the metadata in the Data Catalog is compounded further. Salesforce customers seem to experience this proliferation of instances quite regularly; in one example, a client organisation had 50 separate Salesforce ‘orgs’.
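At heart, comparing instances is a set-difference problem. This sketch assumes each instance’s schema has already been scanned into a mapping of object names to field names, and reports the (object, field) pairs that exist in only one of two instances. The sample objects and fields are hypothetical; the `__c` suffix mimics Salesforce’s naming convention for custom fields.

```python
from typing import Dict, Set, Tuple

Schema = Dict[str, Set[str]]  # object/table name -> set of field/column names


def diff_schemas(a: Schema, b: Schema) -> Dict[str, Set[Tuple[str, str]]]:
    """Return the (object, field) pairs present in only one of two instances."""
    pairs_a = {(obj, f) for obj, fields in a.items() for f in fields}
    pairs_b = {(obj, f) for obj, fields in b.items() for f in fields}
    return {"only_in_a": pairs_a - pairs_b, "only_in_b": pairs_b - pairs_a}


# Hypothetical instances of the same package, customised differently.
instance_eu = {"Account": {"Id", "Name", "Vat_Number__c"}, "Contact": {"Id", "Email"}}
instance_us = {"Account": {"Id", "Name", "Tax_Id__c"}, "Contact": {"Id", "Email"}}
diff = diff_schemas(instance_eu, instance_us)
```

With 50 instances, the same comparison would run pairwise or against a chosen reference instance.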

An Alternative Approach

The solution to this conundrum is a process that can access and extract the rich metadata from wherever it resides, and then lets users select the metadata relevant to the needs of the business for import into the Data Catalog.
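A minimal sketch of such a process rests on two stated assumptions: the package keeps business names in its own application-level dictionary tables rather than in the database system catalog, and that dictionary is modelled here by a hypothetical `app_dictionary` table in an in-memory SQLite database. The extraction step attaches those descriptions to the tables that physically exist, and the selection step keeps only the business areas requested. All table names, business names and areas are invented for illustration.

```python
import sqlite3
from typing import Dict, List, Set


def extract_business_metadata(conn: sqlite3.Connection) -> List[Dict[str, str]]:
    """Attach business names from the hypothetical app_dictionary table to physical tables."""
    physical = {
        name
        for (name,) in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' "
            "AND name NOT LIKE 'sqlite_%' AND name != 'app_dictionary'"
        )
    }
    rows = conn.execute(
        "SELECT table_name, business_name, business_area "
        "FROM app_dictionary ORDER BY table_name"
    )
    # Keep only dictionary entries whose table physically exists in this instance.
    return [
        {"table": table, "business_name": name, "business_area": area}
        for table, name, area in rows
        if table in physical
    ]


def select_for_catalog(metadata: List[Dict[str, str]], areas: Set[str]) -> List[Dict[str, str]]:
    """Keep only the entries for the business areas the catalog should cover."""
    return [m for m in metadata if m["business_area"] in areas]


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE TAB1 (C1 TEXT, C2 TEXT);
    CREATE TABLE TAB2 (C1 TEXT);
    CREATE TABLE TAB3 (C1 TEXT, C2 TEXT, C3 TEXT);
    CREATE TABLE app_dictionary (table_name TEXT, business_name TEXT, business_area TEXT);
    INSERT INTO app_dictionary VALUES
        ('TAB1', 'Customer master', 'Sales'),
        ('TAB2', 'Vendor master', 'Purchasing'),
        ('TAB3', 'Sales order header', 'Sales'),
        ('TAB9', 'Retired table', 'Sales');
    """
)
selected = select_for_catalog(extract_business_metadata(conn), {"Sales"})
```

Only the selected subset, now carrying business names, would be passed on for import, rather than every table in the application database.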

Populating a Data Catalog with rich metadata from these complex systems is important if the catalog is to be comprehensive and inclusive. Organisations need a specialist Self-Service Metadata Discovery product for ERP and CRM packages.

To return to the previous example, table TF120 makes much more sense when the user can see its surrounding context, such as its business name, its description and its relationships to other tables.

In short, as more and more businesses seek to extract the hidden value from their data, they are turning to Data Catalog solutions. However, it is essential to plan ahead when beginning the hunt for a suitable product vendor, and a full audit of existing systems is the natural starting point for ensuring that the chosen solution is compatible with them.

Top tips when implementing a Data Catalog:

- If you have one or more ERP or CRM solutions whose metadata should be included, ask the vendor how they propose to achieve this in a cost-effective and timely manner.

- Check how well each candidate solution can accommodate detailed and complex metadata; once the selection process has ended, it is too late!

- Be wary of solutions that rely on techniques such as the package vendor’s own tools, crowdsourcing, internal specialists, external consultants or even prebuilt templates. Although these all offer some value, they also increase project time and costs, deliver varying levels of accuracy, and still involve the manual work of mapping the metadata and importing it into the catalog.