Click to learn more about author Gilad David Maayan.

Data deduplication, or “dedupe” for short, is a compression technique that removes duplicate information from a dataset. Deduplication can free up substantial storage, particularly when it is performed over large volumes of data.

How It Works

At a high level, deduplication software eliminates redundant data by storing only the first unique instance of any piece of data. Any later copies of the same data that the software finds are removed and replaced with a pointer, or reference, to the original copy. The process is transparent both to end users and to the applications they use.

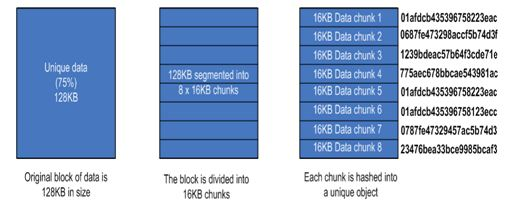

Drilling down into the process more, deduplication software typically generates unique identifiers for data using cryptographic hash functions. Deduplication at the file level is inefficient because even if a minuscule part of a file is altered, such as a single bit, a whole new copy of that file will be stored.

Image Credit: Computer World
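The hash-and-pointer idea, and the file-level weakness just described, can be illustrated with a toy in-memory store. This is a minimal Python sketch, not how any particular product works: the class name `FileLevelDedupStore` is hypothetical, SHA-256 is assumed as the hash function, and Python dicts stand in for real storage.

```python
import hashlib


class FileLevelDedupStore:
    """Hypothetical toy file-level deduplication store (illustrative only).

    Each file's full contents are hashed with SHA-256; identical files share
    one stored copy, and every file name just points at that copy's hash.
    """

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}   # hash -> unique file contents
        self.pointers: dict[str, str] = {}  # file name -> hash of its contents

    def put(self, name: str, data: bytes) -> None:
        digest = hashlib.sha256(data).hexdigest()
        # Store the contents only the first time this hash is seen.
        self.blobs.setdefault(digest, data)
        # Every file, duplicate or not, is recorded as a pointer to a stored copy.
        self.pointers[name] = digest

    def get(self, name: str) -> bytes:
        return self.blobs[self.pointers[name]]

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self.blobs.values())


if __name__ == "__main__":
    store = FileLevelDedupStore()
    report = bytes(1_000_000)             # a 1 MB file of zero bytes
    store.put("report.bin", report)
    store.put("report-copy.bin", report)  # exact duplicate: only a pointer is added
    print(store.stored_bytes())           # 1000000

    # Flip a single bit: the whole-file hash changes, so a full new copy is stored.
    edited = bytearray(report)
    edited[0] ^= 0b1
    store.put("report-v2.bin", bytes(edited))
    print(store.stored_bytes())           # 2000000
```

The exact duplicate costs nothing beyond a pointer, but the one-bit edit doubles the stored size, which is why file-level deduplication handles small changes to large files poorly.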

Modern deduplication software normally operates at the block level or even the bit level. In these cases, the software assigns identifiers to chunks of data, or to runs of bits, rather than to whole files. When a file is updated, only the new data blocks or bits are saved instead of a copy of the entire file.
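Block-level deduplication can be sketched the same way. The store keeps one copy of each unique block, and for every file it keeps an ordered list of block hashes that act as pointers. This is a minimal Python sketch under stated assumptions: fixed 4 KiB blocks (many real systems use variable-size, content-defined chunks instead), SHA-256 identifiers, and an in-memory dict as the block store. `BlockLevelDedupStore` is a hypothetical name, and the sketch stops at block granularity rather than going down to the bit level.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed fixed block size; real products vary and may use variable-size chunks


class BlockLevelDedupStore:
    """Hypothetical toy fixed-size block-level deduplication store (illustrative only)."""

    def __init__(self, block_size: int = BLOCK_SIZE) -> None:
        self.block_size = block_size
        self.blocks: dict[str, bytes] = {}     # hash -> unique block contents
        self.files: dict[str, list[str]] = {}  # file name -> ordered list of block hashes

    def put(self, name: str, data: bytes) -> int:
        """Store a file and return how many new bytes actually had to be written."""
        new_bytes = 0
        recipe: list[str] = []
        for start in range(0, len(data), self.block_size):
            block = data[start:start + self.block_size]
            digest = hashlib.sha256(block).hexdigest()
            if digest not in self.blocks:
                # First time this block is seen: keep the one real copy.
                self.blocks[digest] = block
                new_bytes += len(block)
            recipe.append(digest)  # pointer to the single stored copy
        self.files[name] = recipe
        return new_bytes

    def get(self, name: str) -> bytes:
        # Reassemble the file by following its block pointers in order.
        return b"".join(self.blocks[d] for d in self.files[name])


if __name__ == "__main__":
    store = BlockLevelDedupStore()
    # 256 distinct 4 KiB blocks (1 MiB total), built deterministically.
    original = b"".join(i.to_bytes(4, "big") * 1024 for i in range(256))
    print(store.put("disk.img", original))  # 1048576: every block is new

    edited = bytearray(original)
    edited[10] ^= 0b1  # flip one bit inside the first block
    print(store.put("disk-v2.img", bytes(edited)))  # 4096: only the changed block is stored
    assert store.get("disk-v2.img") == bytes(edited)
```

Compared with the file-level case, the same one-bit change now costs a single 4 KiB block instead of a full second copy of the file.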

Where Deduplication Happens

Data deduplication is mainly needed in secondary storage locations, where cost efficiency is the main concern. Secondary locations are used for backing up data, and they tend to contain much more duplicate data than primary storage.

Primary storage locations, such as an on-premises data center running production workloads, prioritize performance above everything else. Because the overhead of deduplication can hurt performance, dedupe is often avoided there.

In general, there are two locations where data deduplication can occur:

- Source-based deduplication happens at the client level: duplicate data blocks are removed before the data is synced to a backup location. This approach reduces the bandwidth used when syncing data to backup locations, but the deduplication work adds performance overhead on the client.

- Target-based deduplication happens within specialized hardware, typically located remotely, which acts as a bridge between the source systems and the backup servers. The specialized hardware improves deduplication performance, particularly for large datasets on the order of many terabytes. However, much more bandwidth is used, because the full, non-deduplicated data must be transmitted to the target.
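The bandwidth trade-off between the two approaches can be shown by counting the bytes each one sends over the network. In this minimal Python sketch, the function names, the 4 KiB chunk size, and the dict standing in for the backup target's hash index are all hypothetical. The per-chunk "does the target already have this hash?" lookup that a real source-based system needs is reduced to a dict membership test, and its metadata traffic is ignored. The hashing inside `source_based_backup` is the work that would run on the client, which is where the source-side performance overhead comes from.

```python
import hashlib

CHUNK = 4096  # assumed chunk size, for illustration only


def chunks(data: bytes, size: int = CHUNK) -> list[bytes]:
    return [data[i:i + size] for i in range(0, len(data), size)]


def digest(chunk: bytes) -> str:
    return hashlib.sha256(chunk).hexdigest()


def source_based_backup(data: bytes, target_index: dict[str, bytes]) -> int:
    """Client-side dedupe: only chunks the target lacks cross the network.

    Returns payload bytes sent; hash-lookup traffic is ignored for simplicity.
    """
    sent = 0
    for c in chunks(data):
        h = digest(c)  # hashing happens on the client
        if h not in target_index:  # stands in for a hypothetical "have this hash?" query
            target_index[h] = c
            sent += len(c)
    return sent


def target_based_backup(data: bytes, target_index: dict[str, bytes]) -> int:
    """Target-side dedupe: the full stream is sent, then deduplicated on arrival."""
    for c in chunks(data):
        target_index.setdefault(digest(c), c)  # hashing happens on the target
    return len(data)


if __name__ == "__main__":
    # 256 distinct 4 KiB chunks (1 MiB), then a second version with one changed bit.
    v1 = b"".join(i.to_bytes(4, "big") * 1024 for i in range(256))
    edited = bytearray(v1)
    edited[10] ^= 0b1
    v2 = bytes(edited)

    src_index: dict[str, bytes] = {}
    print(source_based_backup(v1, src_index), source_based_backup(v2, src_index))  # 1048576 4096

    tgt_index: dict[str, bytes] = {}
    print(target_based_backup(v1, tgt_index), target_based_backup(v2, tgt_index))  # 1048576 1048576
```

Both targets end up storing the same unique chunks. The difference is that the target-based path sends the full second version over the network even though only one chunk changed.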

Data Deduplication and The Cloud

Cloud Computing has emerged in recent years as an excellent candidate to serve a wide range of enterprise IT functions. The Public Cloud, wherein vendors provide a range of compute, storage, and infrastructure services accessible via an Internet connection, is a cost-effective, scalable option for backing up and archiving data.

Enterprises can use the Public Cloud to get on-demand storage with no upfront capital expenditure, which explains its growing popularity. Enterprise adoption of the Public Cloud increased to 92 percent in 2018 from 89 percent in 2017. However, one major issue with Public Cloud storage is the hidden costs that often emerge once an enterprise begins backing up data to the Cloud.

Most Public Cloud vendors charge for storage per gigabyte stored. Data deduplication is therefore an important part of reducing Cloud costs, because it reduces the volume of data backed up to Cloud systems (see this article by NetApp for more details). At a 25:1 compression ratio, 250 GB of data fits into 10 GB of disk space. Over large datasets, the savings could amount to thousands of dollars.
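The 25:1 arithmetic, and how it turns into money at scale, can be worked through in a few lines. This Python sketch assumes deduplication happens before upload, so the deduplicated size is what gets billed. The $0.02 per GB-month price and the 100,000 GB (100 TB) dataset are hypothetical figures chosen for illustration, not any vendor's actual pricing.

```python
PRICE_PER_GB_MONTH = 0.02  # hypothetical price in USD, not any specific vendor's rate


def deduped_size_gb(logical_gb: float, ratio: float) -> float:
    """Capacity actually stored (and billed) after deduplication at ratio:1."""
    return logical_gb / ratio


def monthly_savings(logical_gb: float, ratio: float, price: float = PRICE_PER_GB_MONTH) -> float:
    """Monthly bill difference between uploading raw data and uploading deduplicated data."""
    return (logical_gb - deduped_size_gb(logical_gb, ratio)) * price


if __name__ == "__main__":
    print(deduped_size_gb(250, 25))                 # 10.0, matching the 25:1 example
    print(f"${monthly_savings(100_000, 25):,.2f}")  # $1,920.00 per month under the assumed price
```

At 25:1, 100,000 GB shrinks to 4,000 GB, so 96,000 GB is never billed. Under the assumed price that is about $1,920 per month, or over $20,000 per year.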

Relying on the Cloud vendor to deduplicate data for you, however, will not lead to cost savings. If you send 30 GB to the vendor’s systems for backup and the vendor compresses that to 3 GB, you are still charged for the full 30 GB. End customers do not benefit from a Cloud provider’s own internal deduplication.

Wrap Up

Data deduplication is essential for modern enterprises to minimize the hidden costs associated with backing up their data using Public Cloud services. Inefficient data storage on its own can become expensive, and such issues are compounded in the Public Cloud when you factor in creating multiple copies of single datasets for archiving or other purposes.

Perform data deduplication before sending data to the Cloud to reduce Cloud costs and avoid unwanted surprises in your monthly bills.

Photo Credit: Pixabay