Do you use Siri on your iPhone, Amazon Echo, or an automated voice response system to check your account balances? Have you seen the Nationwide commercial “Impersonal,” in which a customer speaks the word “representative” into his smartphone microphone to be connected to a customer service representative? The automated system does not recognize his request, and he spends several minutes trying to reach a person. These are all examples of Big Data using Natural Language Processing and Speech Recognition technology, the basis of what is also known as automated voice response or interactive voice response. They all fall under the realm of Artificial Intelligence (AI).

Great advances have been made in the Artificial Intelligence industry, but there is still a long way to go. Natural Language Processing technology, or cognitive technology, extends information technology to tasks traditionally performed by humans, such as checking account or order status, performing transactions, and answering queries. Such technologies help companies improve service quality, reduce response times for customers, and cut costs.

“We have the ability to extract data, analyze it, find the answer or complete a task and present it back to the user in an understandable format in a matter of seconds,” said Tim Tuttle, Ph.D., Chief Executive Officer of Expect Labs, speaking at the DATAVERSITY® Smart Data 2015 Conference. Tuttle asserted that Mind Meld, the Expect Labs platform, has a distinct advantage: its clients use the technology to create voice and natural language interfaces for a wide variety of devices. Currently, 1,600 companies are using the Mind Meld platform, and it can create a voice experience in days or weeks instead of years.

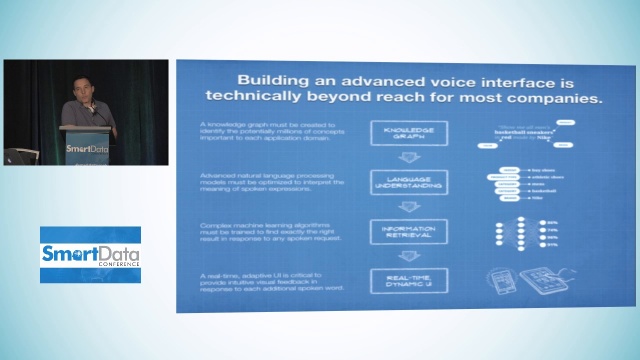

Tuttle said that companies building from scratch face a one-to-two-year development cycle, and that such a project is beyond the reach of most companies because they lack the technical expertise to build the interface themselves. Mind Meld solves this problem: it provides cutting-edge voice applications and voice interfaces whose intelligence and understanding are customized around the dataset that matters most to each customer.

How will voice and natural language transform over the next 5 years?

Unfortunately, building advanced voice interface technology is beyond the grasp of most companies due to a lack of expertise. However, Mind Meld’s advanced Artificial Intelligence technology powers a new generation of intelligent interfaces for both voice and natural language.

Why is everybody excited about this space?

Recent Artificial Intelligence breakthroughs have tackled the problems of voice interfaces and natural language. The improvements made in the past two years have overshadowed all the improvements made over the previous thirty years combined.

Tuttle said that continued AI breakthroughs over the next 18 months will produce machine speech recognition that surpasses humans for the first time since the dawn of AI. According to most AI scientists, within the next year there will be machine speech recognition systems for English that recognize speech better than humans do. We will live in a world where voice becomes critical for rich interactions with applications and devices. Big companies have invested in this technology and now make it available free as part of every operating system. If you are a developer who wants to take advantage of speech recognition, it is already built into the operating system you are using. You now have a new tool for developing applications.

Who uses Speech Recognition?

As of June 2015, Apple performed over 1 billion speech recognition queries per week. At Google, 10% of global search traffic comes from voice queries. This shows a massive shift in consumer behavior. It is predicted that in less than five years, over half of all searches will be performed using voice.

Tuttle said, “You will have the option to use voice because voice will be faster than typing in data or using keyboards.” Voice will be the primary way to extract information because it is faster and easier, and it needs no special user interface, since everyone already knows how to talk to people.

According to Tuttle:

“A frictionless voice experience will delight users, increase engagement, and drive conversions. Previously voice has been only a feature people used when their hands were occupied because there was no other option. This is not going to be the case going forward. Voice becomes the faster way to find information and discover tasks.”

In the video, Tuttle demonstrated using voice technology to find a product on the internet: the search took 3 seconds by voice and 15 seconds by touch. Voice is faster than touch for shopping, and he argued that it is going to become the dominant way to find answers every day.

Where is the opportunity for other types of applications?

Mind Meld takes the same state-of-the-art technology that has been developed for smartphones and makes it available to other companies.

Tuttle stated:

“We envision seeing it living on every device, in every home, in every office. Many companies will want to create their own voice driven intelligence and many will use our platform to create their experience.”

What is the Challenge?

This is technically beyond the reach of most companies because it requires building a superior Artificial Intelligence interface for their applications. Implementing advanced voice interfaces requires four steps: Knowledge Graph, Language Understanding, Information Retrieval, and Real-time Dynamic User Interface.

First, a knowledge graph must be created to identify the potentially millions of concepts important to each application domain. Next, you create natural language models that can understand any question a user might ask; users can phrase a question in countless permutations, and you have to handle them all. Finally, once you can find the right answer, you need to present it almost instantaneously so the user can accomplish the task using only the smart device.
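To make the four steps more concrete, here is a deliberately tiny Python sketch of how they might fit together for a restaurant-search app. It is not Mind Meld's implementation, which the presentation does not describe. The concept table, the restaurant catalog, and the functions `understand`, `retrieve`, and `present` are all hypothetical. The sketch assumes speech has already been transcribed to text and uses simple keyword matching where real systems would use trained language models and far larger knowledge graphs.

```python
from __future__ import annotations

from dataclasses import dataclass

# Step 1 (Knowledge Graph, hypothetical): map each canonical concept in the
# domain to the phrases a user might say for it.
KNOWLEDGE_GRAPH: dict[str, set[str]] = {
    "pizza": {"pizza", "pizzeria"},
    "sushi": {"sushi", "sashimi"},
    "tonight": {"tonight", "this evening"},
}


@dataclass
class Restaurant:
    name: str
    cuisine: str
    open_tonight: bool


# Hypothetical catalog standing in for the application's domain data.
CATALOG = [
    Restaurant("Luigi's", "pizza", True),
    Restaurant("Tokyo Bar", "sushi", False),
    Restaurant("Slice House", "pizza", False),
]


def understand(utterance: str) -> set[str]:
    """Step 2 (Language Understanding): find known concepts in a transcribed
    utterance using naive, case-insensitive phrase matching."""
    text = utterance.lower()
    return {
        concept
        for concept, phrases in KNOWLEDGE_GRAPH.items()
        if any(phrase in text for phrase in phrases)
    }


def retrieve(concepts: set[str]) -> list[Restaurant]:
    """Step 3 (Information Retrieval): filter the catalog by the concepts found."""
    results = [r for r in CATALOG if r.cuisine in concepts]
    if "tonight" in concepts:
        results = [r for r in results if r.open_tonight]
    return results


def present(results: list[Restaurant]) -> str:
    """Step 4 (Real-time Dynamic UI): build a short answer to show or speak."""
    if not results:
        return "Sorry, I couldn't find a match."
    return "Found: " + ", ".join(r.name for r in results)


if __name__ == "__main__":
    print(present(retrieve(understand("Find pizza open tonight"))))
```

For example, `present(retrieve(understand("Find pizza open tonight")))` returns `"Found: Luigi's"`. Keyword matching keeps the sketch short, but it breaks down quickly on the many ways users phrase the same question, which is why production systems rely on statistical language models instead.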

What’s Next?

This technology would greatly help drivers and make their lives easier without distracting them. Another example would be finding a substitute for a missing recipe ingredient while you are cooking in the kitchen, without having to use your hands to search for the information on a smart device. Wearables with small screens and no keyboard could also support interactions by voice.

There will be products similar to Amazon Echo, so that no matter what room you are in, you can book a hotel room, make a restaurant reservation, or reserve a taxi from your couch. Tuttle said, “We will make systems work better and better.”

Here is the video of the Smart Data 2015 Conference Presentation: