Click to learn more about author Alex Paretski.

Statistics as a branch of applied mathematics plays an important role in identifying hidden patterns in data. That’s why it is frequently used interchangeably with broader terms such as Data Science, Data Analytics, Business Analytics, and Machine Learning. Not only is this comparison technically incorrect, but it also leads to over-simplification of Data Science projects to some shiny new algorithm that can somehow magically address complex business issues on its own.

Given the time and resource investments involved in Business Intelligence projects, it is important for Managers and Business Executives to clearly understand the anatomy of a typical delivery project, and how statistics fits into the overall scheme of things. We hope that this will allow for better planning, technology procurement, project risk assessment, and resource allocation when undertaking Advanced Analytics projects with potentially far-reaching consequences.

What Constitutes Data Science?

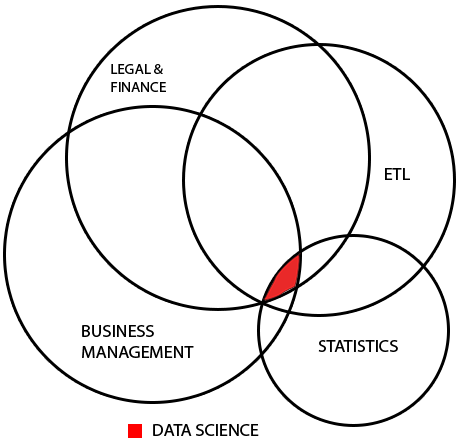

Data Science refers to a collection of practices where principles of Hypothesis Development, Data Modeling, Data Transformation, Feature Engineering, Technical Architecture, and Project Management are used in conjunction with statistical methods to address specific data challenges. The easiest way to understand Data Science is to look at Business Analytics, which is a subset of Data Science as applied specifically to business problems.

Customer churn prediction, serving ads that might get the highest click-through rates, showing next best action based on past purchase history, and detecting procurement fraud before it happens, are all examples of Data Science challenges where statistics is used to glean insights from data. The final solution requires multiple steps including:

- Articulating the specific business challenge

- Gathering the required data

- Transforming this data so that it can be used for modeling (and without sacrificing sample randomness)

- Deciding on the best statistical method to use

- Building the statistical model

- Interpreting results from the model in a way that makes sense for the specific business situation

Operationalizing the model in a production environment.

As can be seen, the Data Science process comprises several steps whereas statistics is just an enabling tool for one of these steps (building the model). Just as are other techniques like Data Warehousing (collecting raw data) and Feature Engineering (preparing the analytics datasets).

Taking an Example

The distinctions outlined above are best brought out with the help of a real-life example. Consider a Marketing Department looking to identify prospects that are most likely to respond to a specific offer. Acquisition budgets are always tight, and it will help to focus on specific channels and tactics for targeting these customers instead of running blanket campaigns with no refined audience targeting.

Looking at this business issue purely from the statistical point of view would involve using some kind of a decision tree model to first identify prospects who responded to a similar offer before and then using the same criteria to score other prospects on the likelihood to convert. Something that can be easily done by a Ph.D. statistician and an SQL developer? After all, the statistician could figure out the best technical algorithm to use while the SQL engineer ran queries to create modeling datasets?

In fact, in many cases, this is indeed all that is budgeted and planned for when Managers embark on Data Science pilots. Based on the discussion above about what constitutes a Data Science project though, even a half-hearted plan to address the original business issue would need to involve numerous business management, legal and, commercial considerations. Let’s quickly see how.

- What exactly is a conversion? Someone signing up for an email newsletter? Signing up for a trial account? Doing a content download? Someone actually making a purchase? What if that signup was a trial and the user canceled without converting into a paid account? What if the user issued a chargeback? Would such records be included in the analysis?

- For decision trees to work, we also need to specify non-converters. How do we identify records who are non-converters? Someone who clicks on an offer but does not make a purchase within the same session? Or within a specified time frame? Never? What if someone has been subjected to multiple contrasting campaigns and he converted for one but not for another?

- All previous campaigns for which conversion data is available were retargeting campaigns. Can we safely apply the results of analysis on users who have already shown purchase intent to users who have never seen our ads before? If not, how do we put together the testing datasets?

- The analytics tools in place only offer an aggregate view of conversion volume and it is currently not possible to get individual prospect level campaign exposure history. Is the cost/time for including this capability justified and in line with the larger technology strategy?

- Say we decide to create a model anyway, and it even turns out to give a high degree of accuracy. The cost of running a highly targeted campaign is high, so can we really afford to waste money on prospects that do not convert? Given the costs and times involved in doing modeling, might we be just better off running broad match campaigns?

- What about skills availability? Vendor certified tool experts (e.g., SAS, SPSS specialists often with minimal grounding in business and data) who like to call themselves Data Scientists but really lack even basic familiarity with acquisition marketing or marketing operations will not be adequate. Do we have access to qualified resources to run the project? Would outsourcing further skew the cost/benefit advantage of having advanced insights?

These are just some of the evaluations that would need to be done in order to successfully apply statistics to solve a business issue as part of a wider Data Science project. For a more in-depth coverage of specific planning steps and due diligence techniques, users are advised to check out the CRISP-DM framework which is the de-facto industry method for delivering business analytics projects.

Summary

As should be obvious even from a very small set of practicalities above, Data Science incorporates a much broader set of considerations to assess the commercial feasibility of analytics investments. The output of statistical modeling may well be a technically precise model but still turn out to be something that may never get deployed due to wider cost/benefit analysis issues.

An all-around business analyst with a strong understanding of company business, data, ETL/Data Warehousing techniques, and statistical concepts, highly skilled Database Architects and ETL Specialists, and qualified project managers are just a few other resources that are needed apart from an ace statistician to turn the promise of Data Science into commercial reality.