Ash Munshi, CEO, and Chad Carson, co-founder of Pepperdata, recently spoke with DATAVERSITY® about the state of Big Data performance and DevOps, what’s on the horizon, and Pepperdata’s role in that future.

Big Data Expansion

Munshi and Carson see many companies experimenting with Big Data. Most start with consumer-facing data on the personalization side, because that is the easiest and best-understood option. Munshi said, “We’ve gone from ‘Let’s try this thing called Big Data’ to ‘Let’s try some business-critical things in production mode.’”

Over the next couple of years, he expects the experimenters to join the companies already running in production, and those already in production to increase their workloads significantly:

“A couple years ago our customers [who] had clusters of 30, 40, 50 nodes, they’re now in the hundreds of nodes and actually running production workloads through them – so that’s been the big shift.”

Comcast, for example, began experimenting when it became a Pepperdata customer last year, and now, he said, “They’re to the point where they’re doubling the size of their installation.” Munshi says that across Pepperdata’s customer base, companies “are routinely increasing the size of the clusters that they’re running on, things are becoming more mission critical, [and are] becoming much more production oriented.”

He sees the other big trend in computing as the introduction of Artificial Intelligence (AI). Machine Learning can be applied to large datasets to make split-second decisions because “machines can do it much, much faster.” He sees companies taking more automated actions across the entire DevOps chain, “so that only the critical set of problems are actually in the hands of humans, and everything else becomes sort of automated and synchronized.”

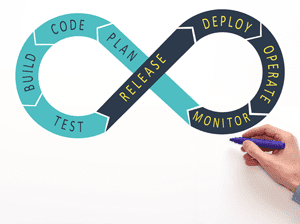

Big Data Performance Lags Behind DevOps

According to “What is DevOps,” the term “DevOps” describes a melding of the disciplines of software development and operations. The concept grew out of a workshop at the 2008 Agile Conference in Toronto, and the term was coined in 2009 by Patrick Debois because it made a better hashtag than “AgileSystemsAdministrationGroup.” DevOps emphasizes communication, collaboration, and cohesion between the traditionally separate development and IT operations teams. By integrating these functions into one team or department, DevOps helps an organization deploy software more frequently while maintaining service stability and gaining the speed needed for more innovation.

“DevOps implies velocity,” according to Munshi. “Being able to take business requirements and turning them into actionable things that the IT organization or the development organization does and being able to do that quickly – that’s essentially the essence of DevOps. We see a lot of mainstream applications already having DevOps applied to it.”

Big Data still lags behind in several ways, though, he said. “Big Data’s infrastructure tends to be different from the classic rest of the IT infrastructure.” He believes that soon “both the standard IT plus Big Data are going to come together and a unified DevOps methodology is going to need to be in place.”

Munshi sees production as having three fundamental attributes: reliability, scalability, and performance.

Performance matters so much in production Big Data because “you’re dealing with complex distributed systems,” he said:

“You’re dealing with not just five, or ten, or even thirty; you’re dealing with hundreds of thousands of machines. You’re not just dealing with megabytes of data; you might be dealing with terabytes and petabytes of data.”

And that data arrives at very high velocity. “To top that off you have lots of people who are trying to do interesting workloads on these kinds of things.” It is a complicated problem to solve when production workloads, analytics workloads, and other processes all come together in this space, he said, “and that’s precisely the problem that we take a look at.”

Pepperdata adds much of its value in the Big Data performance space, he said: “We help our customers run production nodes. We have over 15,000 of them that we are monitoring every five seconds, collecting over 300 data points from each one of them.” Those nodes run some fifteen million jobs per year. “And so far, we have seen 200 trillion data points from those nodes.”
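
The monitoring figures Munshi quotes can be sanity-checked with simple arithmetic. The sketch below assumes a constant fleet of exactly 15,000 nodes, exactly 300 metrics per node, and one sample every five seconds around the clock. Those assumptions are simplifications, since the quoted figures are lower bounds ("over") and a real fleet changes size over time.

```python
# Back-of-envelope check of the monitoring volume described in the interview.
# Assumptions (simplifications, not Pepperdata data): a constant fleet of
# exactly 15,000 nodes, exactly 300 metrics per node, one sample every
# 5 seconds, continuously, in a 365-day year.
NODES = 15_000
METRICS_PER_NODE = 300
SAMPLE_INTERVAL_S = 5
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000

points_per_second = NODES * METRICS_PER_NODE // SAMPLE_INTERVAL_S
points_per_year = points_per_second * SECONDS_PER_YEAR
years_to_200_trillion = 200 * 10**12 / points_per_year

print(f"{points_per_second:,} data points per second")
print(f"{points_per_year / 10**12:.1f} trillion data points per year")
print(f"{years_to_200_trillion:.1f} years to reach 200 trillion at this rate")
```

Under these assumptions the fleet produces 900,000 data points per second, or roughly 28.4 trillion a year. At exactly these rates, accumulating 200 trillion points would take about seven years.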

Munshi sees performance as the most important deliverable. “Our mantra here is ‘Performance can mean the difference between being business critical and business useless, when it comes to Big Data.’”

Catching Up to DevOps

Munshi says that for the DevOps chain to work well with Big Data, “it has to have knowledge about what’s actually going on in the cluster in order for things to make sense in production. So, when we monitor all of the information” that Pepperdata has access to, “we can go to the operators and ask – ‘Hey, are there resource utilization issues? Are there resource contention issues? Are the jobs running on the right types of nodes?’”

“We can go back to the developers and give them information about the assumptions that were made about data and computing resources when the algorithm was designed,” he said. This gives production an opportunity to inform development and makes the whole cycle more efficient. “We want to be able to take all the data that’s being collected and actually feed it back into every step of the DevOps process,” he said.

“Other companies like Cloudera, MapR, and Hortonworks have tools for some of this stuff, but what we find in our customer base is that those tools don’t provide the fine-grained information that we provide, and they don’t scale.” Because Pepperdata’s products scale to thousands of nodes, Munshi says they can “supply the headroom that’s needed, but also the detail, and we can do that simultaneously,” and customers can use them alongside their existing Big Data software.

Application Profiler

Pepperdata’s most recent offering, Application Profiler, contributes to DevOps by giving developers targeted, granular operations information so they can modify applications for maximum efficiency. Users can make sure jobs are truly ready before moving them to production clusters, because the performance recommendations are clear, detailed, and flexible.

Munshi said, “We’re telling the developer, ‘Hey, you see this job? It breaks down into these particular steps. Steps 5 and 17 are problematic,’” because they either don’t use resources properly or use too many resources. Developers can use that information to modify code so the application runs at maximum efficiency. “Operators told us [that] developers don’t understand what we are doing,” he said, and Application Profiler is intended to fill that gap. Built on Dr. Elephant, an open source platform created by LinkedIn, it is available as SaaS and supports Spark and MapReduce on all standard Hadoop distributions: Cloudera, Hortonworks, MapR, IBM, and Apache, he said.
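
A small heuristic can illustrate the kind of per-step check Munshi describes, where a job is broken into steps and the ones that underuse or overrun their resources are flagged. This is a hypothetical sketch, not the actual logic of Application Profiler or Dr. Elephant. The `Step` record, the memory figures, and the 50% and 100% utilization thresholds are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen for illustration only.
LOW_UTILIZATION = 0.5   # peak use below half the allocation: resources wasted
HIGH_UTILIZATION = 1.0  # peak use at or above the allocation: too many resources


@dataclass
class Step:
    """Hypothetical per-step summary of one stage of a job."""
    number: int
    allocated_mb: int
    peak_used_mb: int


def flag_steps(steps):
    """Return (step number, reason) for each step whose peak memory use is
    far below, or at or above, the memory allocated to it."""
    flagged = []
    for step in steps:
        if step.allocated_mb <= 0:
            raise ValueError(f"step {step.number} has no allocation")
        ratio = step.peak_used_mb / step.allocated_mb
        if ratio < LOW_UTILIZATION:
            flagged.append((step.number, "underused allocation"))
        elif ratio >= HIGH_UTILIZATION:
            flagged.append((step.number, "at or over allocation"))
    return flagged


# Made-up job with 20 steps; only steps 5 and 17 misbehave.
job = [Step(n, 4096, 3000) for n in range(1, 21)]
job[4] = Step(5, 4096, 1024)
job[16] = Step(17, 4096, 4200)

print(flag_steps(job))  # [(5, 'underused allocation'), (17, 'at or over allocation')]
```

Reporting a reason alongside each step number mirrors the idea in the interview. The developer learns which steps to look at and also whether the fix is to shrink the allocation or to reduce the step's resource demand.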

Pepperdata has three existing offerings that work with the new Application Profiler: Cluster Analyzer, Capacity Optimizer and Policy Enforcer.

Cluster Analyzer

Cluster Analyzer helps troubleshoot complex Big Data performance problems; tracks jobs, users, and hardware resources; and provides accurate chargeback reports and custom alerts on more than 300 metrics. “We monitor at such a deep level, and have such a deep understanding of the entire Big Data stack,” he said. This combination of data and correlation allows users to troubleshoot “what’s going on in their production clusters in order of magnitude, so that they can actually understand every single thing that’s going on.” In addition, he said, “Managers can get incredibly fine-grained reports about who is using what resource,” which is useful from a chargeback perspective.

Capacity Optimizer

Capacity Optimizer makes it possible to run more jobs on an existing cluster, run those jobs faster, and reduce the hardware footprint. According to Pepperdata, Capacity Optimizer unlocks wasted cluster capacity so users can add up to 50% more work on existing hardware.

Policy Enforcer

Munshi said that some jobs are more important than others, and that by managing workloads efficiently, a company can ensure its important jobs aren’t starved of resources. “Policy Enforcer essentially guarantees that.” Policy Enforcer provides tools to ensure on-time execution of critical jobs to meet quality-of-service commitments, and helps manage ad hoc jobs without affecting critical workloads. It also supports multi-tenancy, he said.

“At the end of the day, we think performance is absolutely key to DevOps for Big Data in production,” Munshi said. He believes Pepperdata’s tools can increase Big Data performance on a grand scale.

Photo Credit: astephan/Shutterstock.com