Click to learn more about author Michael Blaha.

Quality is an underappreciated aspect of data models. The purpose of a model is not just to capture the business requirements, but also to represent them well. A high-quality model lessens the complexity of development, reduces the likelihood of bugs, and enhances the ability of a database to evolve. There are both qualitative and quantitative measures of quality.

This is the first of a two-part series. This blog discusses quality for day-to-day operational applications. Next month’s blog will discuss data warehouses.

Qualitative Measures of Quality

Here are some critical factors for a high-quality operational data model.

- Lack of redundancy. A model should strive to store data a single time. Sometimes developers add duplicate data thinking that the database will be more efficient. But that makes it difficult to keep data consistent. You must ensure that every bit of code that touches the data maintains correctness. In practice, oversights are difficult to avoid. Redundancy complicates software and risks errors.

- Use of declaration. Relational databases are declarative: they store both data and its description. A data model should be declarative too. Do not store codes in a database and rely on programming to decipher them. Rather, put both the codes and their definitions in the database.

- Modest size. Projects have many pressures that cause a model to grow. As a counterweight, all projects should have an explicit step to consider what can be removed from the model. Few applications require more than 100 entity types. Excess content adds time and work. Do not add content because it might be helpful in the future. Sometimes you can simplify a model by making it more abstract.

- Proper abstraction. A data model strikes a balance between a literal representation and less direct ways of thinking. A literal model is easier to understand but requires more development work and offers less flexibility. An abstracted model is harder to understand. Consider abstraction when it lets you substantially simplify a model.

- No arbitrary restrictions. Avoid arbitrary limits, such as having a different attribute for each kind of phone (office, home, and cell). Such a model breaks when a new kind of phone appears. Instead you can store each phone number along with its phone number type.
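The phone example, together with the advice to keep codes and their definitions in the database, can be sketched as follows. This is a minimal illustration using Python's built-in `sqlite3` module; the table and column names (`phone_type`, `person`, `phone`, `type_code`) and the sample data are assumptions made for the example, not part of any particular model.

```python
import sqlite3


def build_phone_schema(conn):
    """Create hypothetical tables: phone types are rows, not columns."""
    # SQLite enforces foreign keys only when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript("""
        CREATE TABLE phone_type (
            code        TEXT PRIMARY KEY,   -- short code, e.g. 'O'
            description TEXT NOT NULL       -- its meaning, stored as data
        );
        CREATE TABLE person (
            person_id INTEGER PRIMARY KEY,
            name      TEXT NOT NULL
        );
        CREATE TABLE phone (
            person_id INTEGER NOT NULL REFERENCES person(person_id),
            type_code TEXT    NOT NULL REFERENCES phone_type(code),
            number    TEXT    NOT NULL,
            PRIMARY KEY (person_id, type_code, number)
        );
    """)


def phones_for(conn, person_id):
    """Return (type description, number) pairs; no decoding in code."""
    rows = conn.execute(
        """SELECT pt.description, p.number
           FROM phone p
           JOIN phone_type pt ON pt.code = p.type_code
           WHERE p.person_id = ?
           ORDER BY pt.description""",
        (person_id,),
    )
    return rows.fetchall()


conn = sqlite3.connect(":memory:")
build_phone_schema(conn)
conn.executemany(
    "INSERT INTO phone_type VALUES (?, ?)",
    [("O", "Office"), ("H", "Home"), ("C", "Cell")],
)
conn.execute("INSERT INTO person VALUES (1, 'Ada')")
conn.executemany(
    "INSERT INTO phone VALUES (?, ?, ?)",
    [(1, "O", "555-0100"), (1, "C", "555-0101")],
)

# A new kind of phone is a data change, not a schema change.
conn.execute("INSERT INTO phone_type VALUES ('S', 'Satellite')")
conn.execute("INSERT INTO phone VALUES (1, 'S', '555-0102')")

print(phones_for(conn, 1))
```

Because the type descriptions live in `phone_type`, the query returns readable labels without any lookup logic in application code, and adding the satellite phone required no new column.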

Steve Hoberman has devised a Data Model Scorecard® that covers similar criteria.

Quantitative Measures of Quality

A data model is a graph and you can use mathematics to characterize its complexity. Robert Hillard does so in his book Information-Driven Business. Entity types are the nodes and relationship types are the connections between nodes.

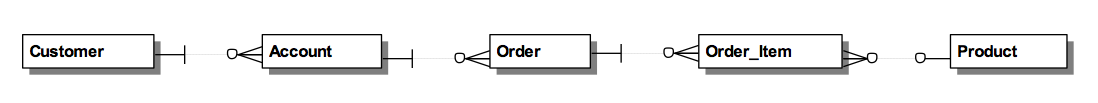

Hillard identifies two aspects of complexity. One is the traversal length from one node to another. Models with long traversals are more complex, error prone, and costly. For example, the path between Customer and Product in the model shown has four traversals. Hillard recommends that the average traversal length in a data model not exceed four.

The other aspect is the average degree, that is, the average number of edges connected to a node. Nodes with three or more edges offer choices about how to traverse from one node to another. Hillard also recommends that the average degree be less than four.
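Both measures are straightforward to compute once a model is written down as a list of relationships. The sketch below assumes that "average traversal length" means the mean shortest-path length over all connected pairs of entity types, which may differ in detail from Hillard's own definition. The entity names and edges are a hypothetical chain chosen so that Customer and Product are four traversals apart; they are not taken from the figure.

```python
from collections import deque
from itertools import combinations


def build_graph(edges):
    """Build an undirected adjacency map from (entity, entity) pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def average_degree(graph):
    """Mean number of relationships attached to each entity type."""
    return sum(len(neighbors) for neighbors in graph.values()) / len(graph)


def path_length(graph, start, goal):
    """Shortest number of traversals from start to goal (breadth-first)."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None  # the two entity types are not connected


def average_traversal_length(graph):
    """Mean shortest-path length over all connected pairs (assumed definition)."""
    lengths = [path_length(graph, a, b) for a, b in combinations(graph, 2)]
    reachable = [d for d in lengths if d is not None]
    return sum(reachable) / len(reachable)


# Hypothetical model: a simple chain of five entity types.
edges = [
    ("Customer", "Account"),
    ("Account", "Order"),
    ("Order", "OrderLine"),
    ("OrderLine", "Product"),
]
model = build_graph(edges)

print(path_length(model, "Customer", "Product"))  # 4
print(average_degree(model))                      # 1.6
print(average_traversal_length(model))            # 2.0
```

For this small chain both averages fall well under the thresholds of four, even though the longest single path is four traversals.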

In Summary

Quality is one of the most important aspects of data models. Your data model must not only satisfy business requirements but must also consider development ease and subsequent maintenance.